Infrastructure

Architects

Building and construction

Contractors

Transport

Power generation and distribution

Renewable energy

Oil and gas

Telecom

Insurance

Banking

Retail

Security and emergency preparedness

Non-profit organizations

Government authorities

Municipalities and county authorities

How we have helped our customers

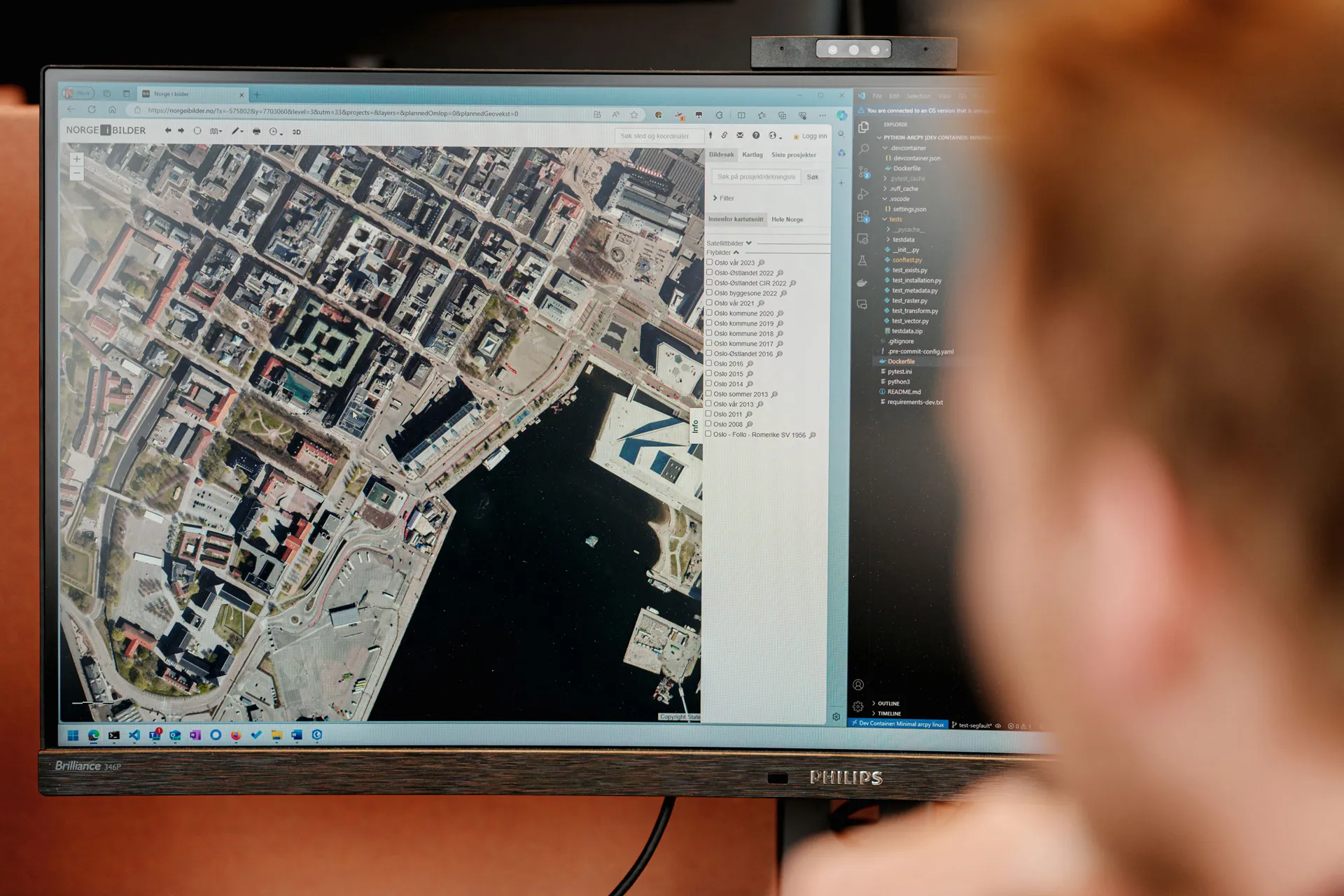

Efficient operations and value creation with GIS and Kubernetes

As senior systems engineer for NIS/GIS at Tensio, Ove Marthinussen has, together with his colleagues, seen first-hand how a solid GIS platform can transform operations and make better use of resources at the grid company.

Power generation and distribution

Explore what we can deliver

Products

We offer world-leading technology adapted to Norwegian needs, with a broad range of geographic systems, from the internationally leading ArcGIS products to in-house solutions and partner collaborations.

Services

Our consulting department has specialist expertise in geographic information systems and helps our customers with everything from short assignments and analyses to large, complex, long-term projects.

Courses and training

Choose from a wide range of in-person classroom courses, digital courses and e-learning courses. See our extensive course calendar to find courses that match the skills needs of you and your company.